The Disinformation Applications Lab (Disinfo App Lab, or DAL) is a non-profit initiative led by the Fundação para Inovação Digital (FID). Its international research team of 9 professors in 6 countries is based in Gatineau, Quebec, Canada.

The team focuses on applications of Artificial Intelligence (AI) that help detect and monitor one very specific type of disinformation: false allegations against politicians in their governance roles (for example, corruption, bribery, or nepotism). Fake news, whether text or multimedia, can target public-sector projects in order to tarnish a government initiative that would otherwise work well. This creates doubt and can lead to the cancellation of projects or programs, depriving citizens of services and holding back national development.

- See this summary (2025-11-12), a presentation in English, or a presentation in French.

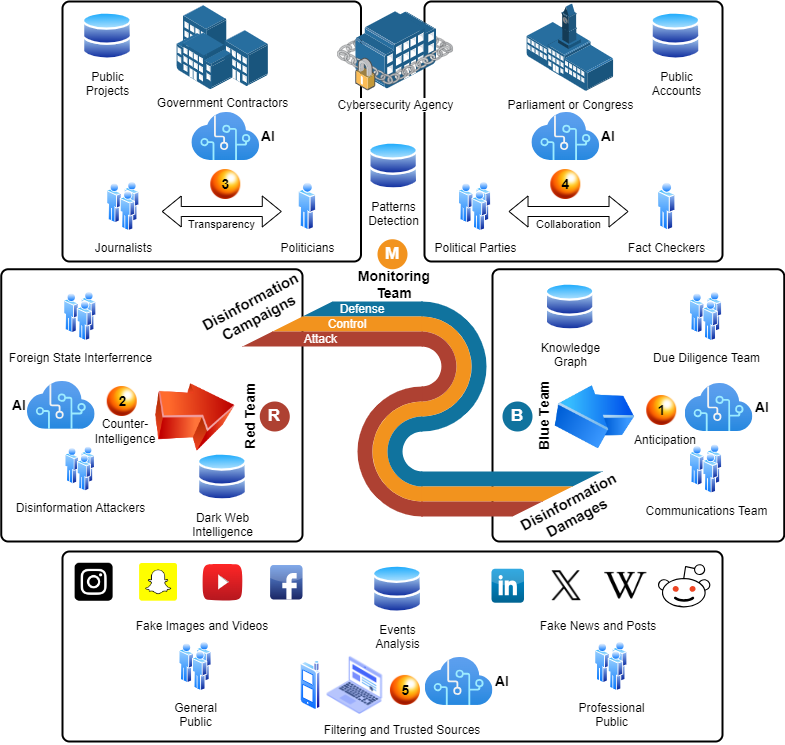

The diagram shows the wide range of actors, information, and technologies involved. We identify five areas: (1) the blue team, which counters the harm caused by disinformation; (2) the red team, which runs disinformation campaigns; (3) the government projects being targeted; (4) the parliaments where the attacks have an impact; (5) the public to whom the disinformation is distributed. Cybersecurity agencies and monitoring teams sit “in the middle”.

By taking a “full campaign” perspective, disinformation monitoring can provide at least five main capabilities: (1) anticipating the “next events” in a chain of fake news, so that blue teams can respond more strategically; (2) counterintelligence on dark web actors, to deter and disarm them; (3) greater transparency, by linking public project information with the actions of politicians and with formal news; (4) collaboration between political parties and their network of fact-checkers, ensuring that no false information is propagated in parliaments or used for decision-making; (5) filtering systems and trusted sources to support end users, whether the general public or professionals.

Since 2024, the team has been collecting case-study data on a range of corruption cases, some in which the allegations proved true and others in which they proved false. In addition, the team will develop new ontologies and knowledge graphs (KGs) to help model disinformation campaigns and attack patterns, which will then be integrated into graph-enabled Large Language Models (LLMs). Finally, a set of use cases will be developed in collaboration with political communication specialists, who will help identify the best way to use AI, especially KGs and LLMs, to overcome the impact of disinformation on their work and to ensure faster, more accurate due diligence when resolving corruption allegations against politicians.
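
To make the knowledge-graph idea more concrete, the following is a minimal sketch, not the team's actual ontology or tooling. It assumes a campaign can be stored as subject-predicate-object triples. The class `CampaignGraph`, the predicate names (`launches`, `makes`, `targets`, `cited_in`, `distributed_to`, `verifies`), and the node names are all hypothetical, chosen only to mirror the five areas described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Triple:
    """One edge of the graph: subject --predicate--> obj."""
    subject: str
    predicate: str
    obj: str


class CampaignGraph:
    """Hypothetical, minimal triple store for one disinformation campaign."""

    def __init__(self):
        # Outgoing edges, indexed by subject node.
        self._out = defaultdict(list)

    def add(self, subject, predicate, obj):
        self._out[subject].append(Triple(subject, predicate, obj))

    def objects(self, subject, predicate):
        """Return all nodes linked from `subject` by `predicate`."""
        return [t.obj for t in self._out.get(subject, ()) if t.predicate == predicate]

    def reachable(self, start):
        """Return every node reachable from `start` by following edges forward."""
        seen = set()
        stack = [start]
        while stack:
            node = stack.pop()
            for t in self._out.get(node, ()):
                if t.obj not in seen:
                    seen.add(t.obj)
                    stack.append(t.obj)
        return seen


# Illustrative data only; none of these names come from real cases.
graph = CampaignGraph()
graph.add("red_team", "launches", "campaign_1")
graph.add("campaign_1", "makes", "claim_1")
graph.add("claim_1", "targets", "project_A")
graph.add("claim_1", "cited_in", "parliament_debate")
graph.add("claim_1", "distributed_to", "public")
graph.add("blue_team", "verifies", "claim_1")

# Which project does the claim target, and what does the red team's campaign touch?
print(graph.objects("claim_1", "targets"))
print(sorted(graph.reachable("red_team")))
```

Following edges forward from `red_team` gives a rough picture of a campaign's footprint (the claim, the targeted project, the parliament, the public). A real ontology would need typed nodes, provenance, and timestamps, which this sketch leaves out.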